High Performance, Efficiency and Scalability

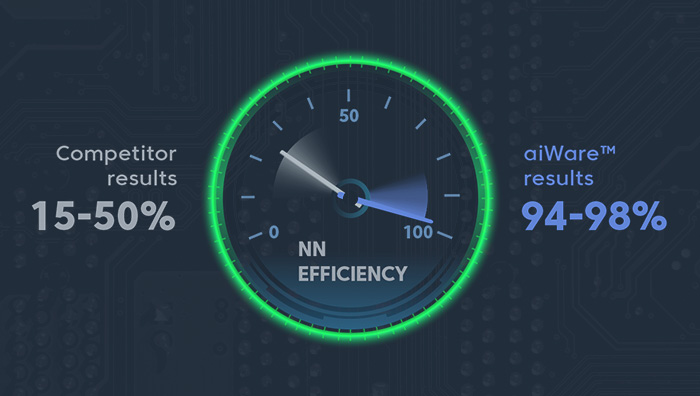

The aiWare hardware IP Core delivers high performance at low power, thanks to its class-leading efficiency and highly optimized hardware architecture. With up to 256 Effective TOPS per core (not just claimed TOPS), aiWare is highly scalable: it can be used in applications requiring anything from 1-2 TOPS up to 1024 TOPS using multiple cores. Thanks to a design focused on optimizing every data transfer in every clock cycle, aiWare makes full use of highly distributed on-chip local memory plus dense on-chip RAM to keep data on-chip as much as possible, minimizing or eliminating external memory traffic. Its innovative dataflow design minimizes external memory bandwidth while sustaining high efficiency regardless of input data size or NN depth.
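As a rough illustration of the scaling figures quoted above, the sketch below estimates how many cores a given workload would need. It assumes that effective throughput adds up linearly across cores, which is a simplification and not something stated here; the helper name `cores_needed` is hypothetical and is not part of any aiWare tooling.

```python
import math

# Figures quoted in the text above.
MAX_EFFECTIVE_TOPS_PER_CORE = 256
MAX_EFFECTIVE_TOPS_MULTI_CORE = 1024


def cores_needed(target_effective_tops: float) -> int:
    """Hypothetical helper: minimum core count for a target throughput.

    Assumes effective TOPS scale linearly with the number of cores,
    which is an illustrative simplification.
    """
    if not 0 < target_effective_tops <= MAX_EFFECTIVE_TOPS_MULTI_CORE:
        raise ValueError("target must be in (0, 1024] Effective TOPS")
    return math.ceil(target_effective_tops / MAX_EFFECTIVE_TOPS_PER_CORE)


if __name__ == "__main__":
    for target in (2, 256, 300, 1024):
        print(f"{target} Effective TOPS: {cores_needed(target)} core(s)")
```

Under this assumption, the 1024 TOPS upper bound corresponds to four cores running at the 256 Effective TOPS per-core maximum.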
